Autoencoders as Classifiers

Exploring Autoencoders as classifiers and other things

Table of Contents

- Introduction
- What is an autoencoder?
- Building some variants in Keras
- Pretraining and Classification using Autoencoders on MNIST

Introduction

Autoencoders are a special case of neural networks, and the intuition behind them is actually very beautiful.

The idea behind autoencoders is actually very simple. Think of any object, a table for example.

Now, to describe a table you can use as many features as you like: it has 4 or more legs, it's made of wood, its top is sometimes round, sometimes square, sometimes an ellipse… A more compressed description could be: it's a smooth surface standing on 3 or more legs.

The question is: can we learn a lower-dimensional, simpler representation of our data?

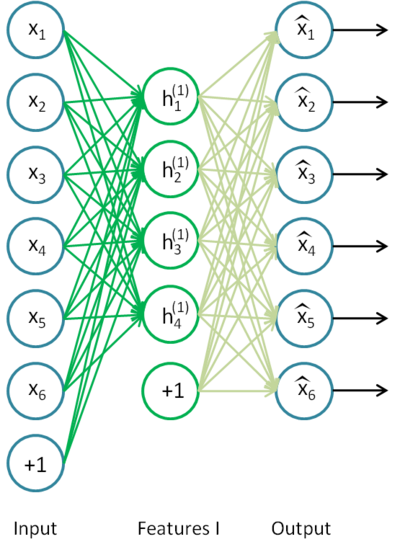

Training an autoencoder is an inference → generative process: first we learn smaller and smaller representations of the input, then we reconstruct from them so that the output is as similar as possible to the input.

What is an autoencoder?

From the Deep Learning Book:

An autoencoder is a neural network that is trained to attempt to copy its input to its output. Internally, it has a hidden layer h that describes a code used to represent the input. The network may be viewed as consisting of two parts: an encoder function h = f(x) and a decoder that produces a reconstruction r = g(h).

Autoencoders are constrained so that they cannot simply learn to copy the input; instead they must deconstruct and then reconstruct it. Because of this constraint, the network is forced to prioritize which features of the input to learn: which ones are useful, and which ones are the most important.

The restriction we impose here takes the form of progressively smaller layers: during encoding the input is squeezed into fewer and fewer units, down to the latent code, and during decoding it is expanded back to its original size.
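To make the definition concrete, here is a minimal NumPy sketch of the encoder h = f(x) and the decoder r = g(h) with a 16-unit bottleneck. It rests on simplifying assumptions: f and g are single layers (the Keras model below stacks several), and the weights are random placeholders rather than trained values, so the reconstruction error is not yet small. Driving that error down is exactly what training does.

```python
import numpy as np

rng = np.random.default_rng(0)

def relu(z):
    return np.maximum(z, 0.0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Hypothetical random weights standing in for trained parameters.
W_enc = rng.normal(scale=0.05, size=(784, 16))
b_enc = np.zeros(16)
W_dec = rng.normal(scale=0.05, size=(16, 784))
b_dec = np.zeros(784)

def f(x):
    """Encoder: map 784-dim inputs to a 16-dim code h (the bottleneck)."""
    return relu(x @ W_enc + b_enc)

def g(h):
    """Decoder: map the 16-dim code back to a 784-dim reconstruction r in (0, 1)."""
    return sigmoid(h @ W_dec + b_dec)

x = rng.random((5, 784))   # a batch of 5 fake "images" with pixels in [0, 1)
h = f(x)
r = g(h)
reconstruction_error = np.mean((x - r) ** 2)  # what training would minimize
print(h.shape, r.shape)  # (5, 16) (5, 784)
```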

- For a more in-depth treatment, I suggest Chapter 14 (Autoencoders) of the Deep Learning Book.

Implementation & Training

- The following implementations can be seen in full here

Let's implement a simple autoencoder in Keras and train it on MNIST.

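```python
from tensorflow.keras.datasets import mnist
from tensorflow.keras.layers import Input, Dense
from tensorflow.keras.models import Model
from tensorflow.keras.optimizers import Adadelta

# load MNIST, flatten each 28x28 image into a 784-vector and scale pixels to [0, 1]
(x_train, y_train), (x_test, y_test) = mnist.load_data()
x_train = x_train.reshape(-1, 784).astype('float32') / 255.
x_test = x_test.reshape(-1, 784).astype('float32') / 255.

input_img = Input(shape=(784,))

# encoder: 784 -> 128 -> 64 -> 32 -> 16
encoded = Dense(128, activation='relu')(input_img)
encoded = Dense(64, activation='relu')(encoded)
encoded = Dense(32, activation='relu')(encoded)
encoded = Dense(16, activation='relu')(encoded)  # the latent layer

# decoder: 16 -> 64 -> 128 -> 784
decoded = Dense(64, activation='relu')(encoded)
decoded = Dense(128, activation='relu')(decoded)
decoded = Dense(784, activation='sigmoid')(decoded)  # pixel values in (0, 1)

autoencoder = Model(input_img, decoded)

# tf.keras defaults Adadelta to learning_rate=0.001; 1.0 was the old standalone Keras default
autoencoder.compile(optimizer=Adadelta(learning_rate=1.0), loss='binary_crossentropy')

# the target is the input itself
autoencoder.fit(x_train, x_train,
                epochs=100,
                batch_size=256,
                shuffle=True,
                validation_data=(x_test, x_test))
```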

Running the code above trains the network (you can always inspect its architecture with Keras's .summary() method).
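The parameter counts that .summary() reports can be checked by hand: a Dense layer from n_in to n_out units has n_in × n_out weights plus n_out biases. The small helper below is a hypothetical illustration (not a Keras function) that applies this rule to the layer sizes of the model above; its total should match the "Total params" line of the summary.

```python
def dense_param_count(layer_sizes):
    """Weights + biases of a stack of fully connected layers.

    layer_sizes lists the width of each layer, input first; each consecutive
    pair (n_in, n_out) contributes n_in * n_out weights and n_out biases.
    """
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

# sizes from the Keras model: input, encoder, latent (16), decoder, output
autoencoder_sizes = [784, 128, 64, 32, 16, 64, 128, 784]

total = dense_param_count(autoencoder_sizes)
encoder_part = dense_param_count(autoencoder_sizes[:5])   # 784 -> ... -> 16
softmax_head = dense_param_count([16, 10])                # the 10-way classifier layer

print(total)         # 221888
print(encoder_part)  # 111344
print(softmax_head)  # 170
```

The last number is worth keeping in mind for the classification step: once the encoder is frozen, the softmax head's 170 parameters should be the only trainable ones.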

The top row of pictures shows MNIST digits and the bottom row shows the autoencoder's reconstructions of them; you can clearly see how well it reconstructs image data.

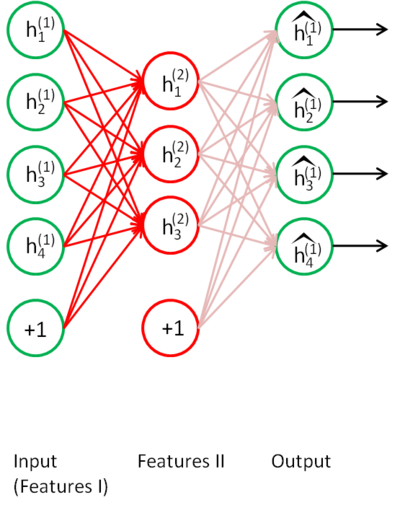
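A comparison figure like this one can be assembled with plain NumPy. The sketch below is one possible way to do it, assuming flattened 28×28 images as in the training code: it tiles originals above their reconstructions and also computes a per-image reconstruction error, which is a more quantitative check than eyeballing. The function names are hypothetical.

```python
import numpy as np

def comparison_grid(originals, reconstructions, n=10, side=28):
    """Tile n originals (top row) above their reconstructions (bottom row).

    Inputs are arrays of flattened images, shape (num_images, side * side).
    Returns a (2 * side, n * side) array suitable for an image viewer.
    """
    top = originals[:n].reshape(n, side, side)
    bottom = reconstructions[:n].reshape(n, side, side)
    # hstack places the n images side by side; vstack stacks the two rows
    return np.vstack([np.hstack(list(top)), np.hstack(list(bottom))])

def per_image_mse(originals, reconstructions):
    """Mean squared reconstruction error of each image."""
    return np.mean((originals - reconstructions) ** 2, axis=1)

# demo on fake data standing in for x_test and the model's predictions
rng = np.random.default_rng(0)
fake = rng.random((12, 784))
recon = 0.9 * fake
grid = comparison_grid(fake, recon)
print(grid.shape)  # (56, 280)
```

With the trained model, one might call comparison_grid(x_test, autoencoder.predict(x_test)) and display the result with matplotlib's plt.imshow(grid, cmap='gray').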

There are other ways to use autoencoders: variational autoencoders (probabilistic), denoising autoencoders (training the network to remove or filter noise, for example Gaussian noise on pictures), and more. The one I want to show you here is using them as feature learners and classifiers.
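For the denoising variant, the main change is in the training data: the network receives a corrupted input but is asked to reproduce the clean one. A minimal sketch of the corruption step, assuming pixels scaled to [0, 1] and a hypothetical noise_factor of 0.5:

```python
import numpy as np

def add_gaussian_noise(x, noise_factor=0.5, seed=None):
    """Return a noisy copy of x (pixels in [0, 1]) with additive Gaussian noise.

    noise_factor scales a standard normal sample; the result is clipped back
    to [0, 1] so it remains a valid image. x itself is not modified.
    """
    rng = np.random.default_rng(seed)
    noisy = x + noise_factor * rng.standard_normal(x.shape)
    return np.clip(noisy, 0.0, 1.0)

clean = np.full((3, 784), 0.5)
noisy = add_gaussian_noise(clean, noise_factor=0.5, seed=0)
```

With the Keras model above, training would then presumably look like autoencoder.fit(add_gaussian_noise(x_train), x_train, ...): noisy inputs, clean targets.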

In essence, any neural network can be turned into a classifier by adding a sigmoid output layer (binary problems) or a softmax output layer (multi-class problems). Here we first train the autoencoder in the normal manner, then remove the decoder and replace it with a softmax layer.
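The two output activations mentioned above are short formulas. This NumPy sketch (an illustration, not Keras's internal implementation) shows a numerically stable softmax, which turns a vector of scores into class probabilities, and the logistic sigmoid used for binary outputs. Taking argmax over the softmax output gives the predicted class, which is also how the prediction step later in this post reads off a digit.

```python
import numpy as np

def softmax(logits):
    """Numerically stable softmax over the last axis (multi-class output)."""
    # subtracting the row max does not change the result but avoids overflow in exp
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)

def sigmoid(z):
    """Logistic sigmoid (binary output): squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

logits = np.array([[2.0, 1.0, 0.1]])
probs = softmax(logits)
print(probs.argmax(axis=-1))  # [0]
```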

Make sure to freeze the encoder layers, so that the second round of training does not overwrite what they learned.

With this change in place, we train the network a second time, keeping all encoder weights frozen so that only the new output layer is tuned.
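What freezing amounts to can be sketched in NumPy: the encoder becomes a fixed feature map, and gradient descent updates only the softmax layer, which makes this second training stage plain multinomial logistic regression on the 16 latent features. Everything here is a hypothetical placeholder (random encoder weights, random toy data and labels, learning rate 0.05); only the structure mirrors the Keras setup.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical frozen encoder: fixed weights standing in for a pretrained 784 -> 16 encoder.
W_enc = rng.normal(scale=0.05, size=(784, 16))
b_enc = np.zeros(16)

def encode(x):
    return np.maximum(x @ W_enc + b_enc, 0.0)  # ReLU features; W_enc is never updated

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

# toy data: 200 random "images" with random labels from 10 classes
x = rng.random((200, 784))
y = rng.integers(0, 10, size=200)
y_onehot = np.eye(10)[y]

# the only trainable parameters: the 16 -> 10 output layer
W_out = np.zeros((16, 10))
b_out = np.zeros(10)

features = encode(x)          # computed once, since the encoder is frozen
W_enc_before = W_enc.copy()

def cross_entropy():
    p = softmax(features @ W_out + b_out)
    return -np.mean(np.sum(y_onehot * np.log(p + 1e-12), axis=1))

loss_start = cross_entropy()
lr = 0.05
for step in range(300):
    p = softmax(features @ W_out + b_out)
    grad_logits = (p - y_onehot) / len(x)    # gradient of mean cross-entropy w.r.t. logits
    W_out -= lr * features.T @ grad_logits   # update the output layer only
    b_out -= lr * grad_logits.sum(axis=0)
loss_end = cross_entropy()
```

Because the encoder never changes, its features only need to be computed once. In Keras, setting trainable = False on the encoder layers has the corresponding effect: the optimizer updates only the weights that remain trainable.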

This way we get a classifier that relies only on a small set of learned features (the 16 units of the latent layer). You can take this further, for example by using the encoder as a feature-extraction network for other models.
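As one illustration of the feature-extractor idea, the sketch below runs inputs through an encoder given as a flat weight list and feeds the resulting features to a simple nearest-centroid classifier. Both the weight layout (kernel shaped (inputs, units) followed by bias, as Keras stores Dense layers) and the choice of nearest-centroid as the downstream model are assumptions for illustration; the encoder weights are random stand-ins and the classifier demo uses toy 2-D points.

```python
import numpy as np

def extract_features(x, weights):
    """Run x through a stack of ReLU Dense layers.

    weights is assumed to be [kernel_1, bias_1, kernel_2, bias_2, ...] with
    kernels shaped (inputs, units).
    """
    h = x
    for kernel, bias in zip(weights[0::2], weights[1::2]):
        h = np.maximum(h @ kernel + bias, 0.0)
    return h

def nearest_centroid_fit(features, labels, num_classes):
    """One centroid (mean feature vector) per class."""
    return np.stack([features[labels == c].mean(axis=0) for c in range(num_classes)])

def nearest_centroid_predict(features, centroids):
    """Assign each sample to the class with the closest centroid."""
    dists = ((features[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=-1)
    return dists.argmin(axis=1)

# random stand-ins for the encoder's weights: 784 -> 128 -> 64 -> 32 -> 16
rng = np.random.default_rng(0)
sizes = [784, 128, 64, 32, 16]
weights = []
for n_in, n_out in zip(sizes, sizes[1:]):
    weights += [rng.normal(scale=0.05, size=(n_in, n_out)), np.zeros(n_out)]
feats = extract_features(rng.random((20, 784)), weights)

# nearest-centroid demo on two well-separated toy clusters
toy = np.array([[0.0, 0.0], [0.2, 0.1], [5.0, 5.0], [5.1, 4.9]])
toy_labels = np.array([0, 0, 1, 1])
centroids = nearest_centroid_fit(toy, toy_labels, num_classes=2)
print(nearest_centroid_predict(toy, centroids))  # [0 0 1 1]
```

With the Keras model, the weight list would presumably come from Model(input_img, encoded).get_weights(), which returns each Dense layer's kernel followed by its bias.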

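```python
from tensorflow.keras.layers import Dense
from tensorflow.keras.models import Model
from tensorflow.keras.optimizers import Adadelta
from tensorflow.keras.utils import to_categorical

# freeze the pretrained encoder layers (shared with `autoencoder`) so only the new head trains
for layer in Model(input_img, encoded).layers:
    layer.trainable = False

# attach a 10-way softmax to the 16-unit latent layer instead of the decoder
output = Dense(10, activation='softmax')(encoded)
classifier = Model(input_img, output)
classifier.compile(optimizer=Adadelta(learning_rate=1.0), loss='categorical_crossentropy', metrics=['accuracy'])

# categorical_crossentropy expects one-hot labels
classifier.fit(x_train, to_categorical(y_train, 10), epochs=5, batch_size=32)

score = classifier.evaluate(x_test, to_categorical(y_test, 10), verbose=0)
print('Test loss:', score[0])
print('Test accuracy:', score[1])

# making a prediction
m = x_test[0]
pred = classifier.predict(m.reshape(1, 784))
pred = pred.argmax(axis=-1)
```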

After executing this code, pred equals 7: the model predicts the digit 7.

You can experiment with this code in countless ways; for example, try shrinking the latent space to a smaller layer of, say, 4 nodes, or just 2.